Smart Cane

Authors: Ahsan Habib, Ahsan Hasib

Description:

This project aims to help blind people navigate more easily as they go about their day-to-day work. It has a built-in music player that plays tracks from a music library. It helps the user walk by using a sonar sensor to keep track of the surroundings. It can read out nearby visible text, and instructions, on command. Finally, a machine vision feature detects cars and pedestrians in the camera's frame and gives audio instructions to the user.

Dev Dependencies

Repo: GitHub

Languages used: Python, C++

Frameworks, Tools and Libraries: OpenCV, Pillow, Pygame, Pytesseract, eSpeak

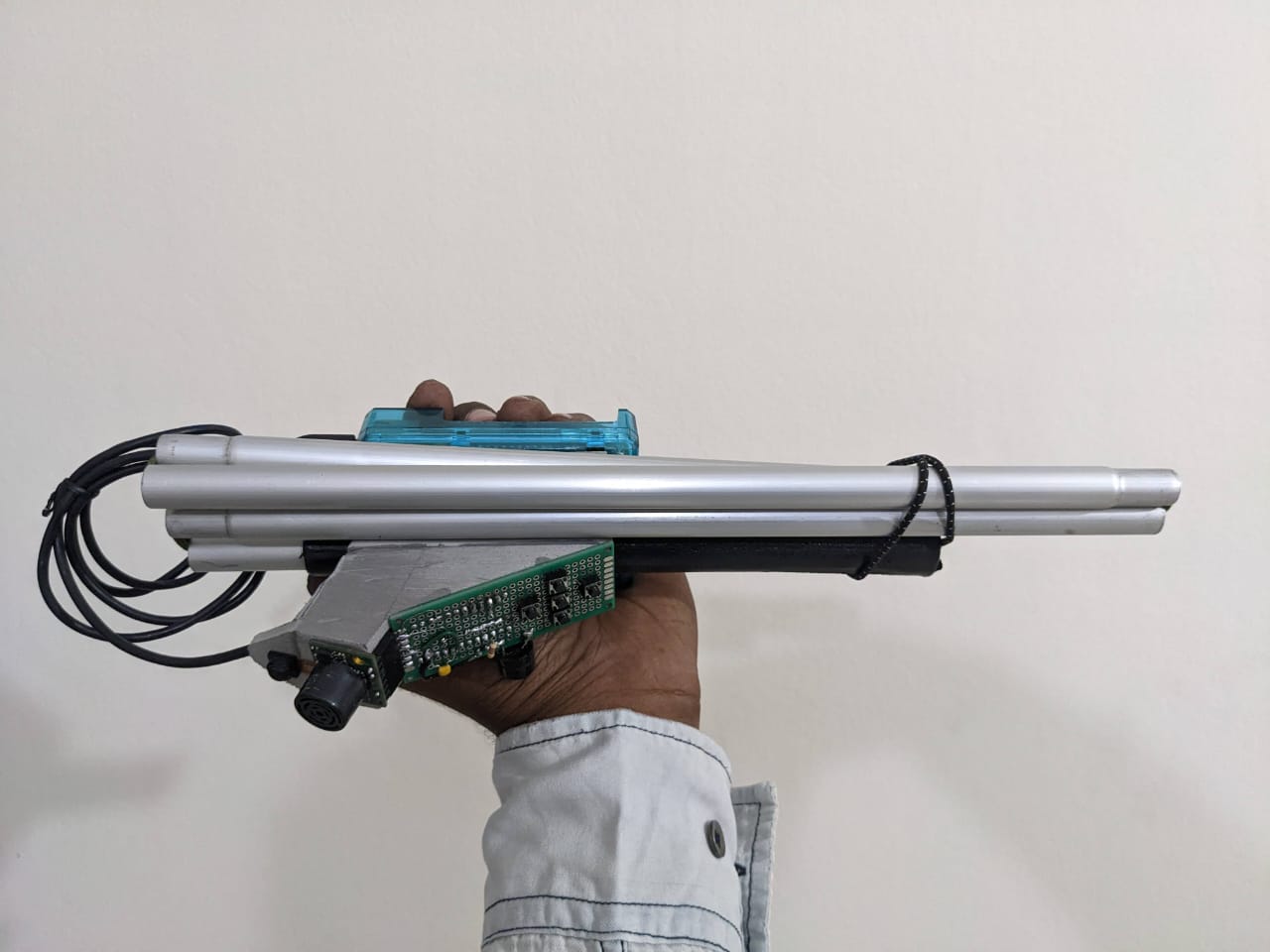

Devices used: Raspberry Pi, USB WebCam, Wired Headphones, Sonar Sensor, Arduino Pro Micro.

Features:

1. Music Player

2. Optical Character Recognition

3. Walking Helper

4. Image Detection

Music Player:-

It plays music from a library location and can play, pause, and skip to the previous or next track. Music is turned off in walking mode.
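The previous/next navigation described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the `Playlist` class, `load_library`, `play`, and the list of file extensions are hypothetical names chosen for the example, and wrap-around at the ends of the library is an assumption. Only the `pygame.mixer` calls come from Pygame itself.

```python
from pathlib import Path


class Playlist:
    """Keeps track of the current position in a list of tracks (hypothetical helper)."""

    def __init__(self, tracks):
        self.tracks = list(tracks)
        if not self.tracks:
            raise ValueError("the music library is empty")
        self.index = 0

    def current(self):
        return self.tracks[self.index]

    def next(self):
        # Assumption: wrap around to the first track after the last one.
        self.index = (self.index + 1) % len(self.tracks)
        return self.current()

    def previous(self):
        # Assumption: wrap around to the last track before the first one.
        self.index = (self.index - 1) % len(self.tracks)
        return self.current()


def load_library(folder, extensions=(".mp3", ".ogg", ".wav")):
    """Return the audio files in a folder, sorted by name."""
    return sorted(p for p in Path(folder).iterdir() if p.suffix.lower() in extensions)


def play(track):
    """Start playing one track through Pygame's music mixer."""
    # Imported here so the playlist logic can be used without Pygame installed.
    import pygame

    if not pygame.mixer.get_init():
        pygame.mixer.init()
    pygame.mixer.music.load(str(track))
    pygame.mixer.music.play()
```

Pausing and resuming would then map onto `pygame.mixer.music.pause()` and `pygame.mixer.music.unpause()`, and entering walking mode could call `pygame.mixer.music.stop()`.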

OCR:-

The device performs Optical Character Recognition (OCR) using Tesseract and reads the recognized text aloud using the eSpeak text-to-speech engine.
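A rough sketch of that OCR-to-speech pipeline is shown below. It assumes the `pytesseract` and Pillow packages and the `espeak` command-line program are installed. The function names, the whitespace clean-up step, and the default speaking rate are assumptions made for illustration and may differ from what the project does.

```python
import subprocess


def clean_ocr_text(raw):
    """Collapse line breaks and repeated spaces so the text is read as one passage."""
    return " ".join(raw.split())


def espeak_command(text, words_per_minute=150):
    """Build the espeak command line; -s sets the speaking rate in words per minute."""
    return ["espeak", "-s", str(words_per_minute), text]


def read_image_aloud(image_path):
    """Run OCR on an image file and speak whatever text was found."""
    # Imported here so the helpers above can be used without the OCR stack installed.
    from PIL import Image
    import pytesseract

    text = clean_ocr_text(pytesseract.image_to_string(Image.open(image_path)))
    if text:
        subprocess.run(espeak_command(text), check=True)
    return text
```

Calling `read_image_aloud("capture.png")` (a hypothetical file name) would speak the recognized text and also return it, which makes it easy to log what was read.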

Walking Helper:-

Using the sonar sensor, it measures the distance to an object and beeps accordingly: the closer the object, the more frequent the beeps.
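One simple way to turn a distance reading into a beep rate is a linear mapping from distance to the pause between beeps. The sketch below illustrates that idea only. The range limits (10 cm to 200 cm) and the pause lengths (0.1 s to 1.0 s) are assumed values, not figures from the project, and the function name is hypothetical.

```python
def beep_interval(distance_cm, min_cm=10.0, max_cm=200.0, fastest_s=0.1, slowest_s=1.0):
    """Return the pause between beeps in seconds, or None if nothing is in range.

    Closer objects give shorter pauses, so the beeping sounds denser.
    All default thresholds are illustrative assumptions.
    """
    if distance_cm > max_cm:
        return None  # nothing close enough to warn about
    clamped = max(distance_cm, min_cm)
    fraction = (clamped - min_cm) / (max_cm - min_cm)  # 0.0 at min_cm, 1.0 at max_cm
    return fastest_s + fraction * (slowest_s - fastest_s)
```

A main loop would read the sensor, call `beep_interval`, sound a beep, and sleep for the returned time before the next reading.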

Image Detection:-

First, it captures an image and uses machine vision to detect pedestrians and cars. It then segments the whole frame into a 3x3 matrix and speaks out the position of each car and pedestrian according to the matrix (left, centre, right | top, middle, bottom).
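The 3x3 mapping step might look like the sketch below. It covers only the conversion from a detection to a spoken position and assumes each detection is a bounding box in `(x, y, width, height)` pixel form, as OpenCV detectors commonly return. Using the centre of the box to pick the cell is an assumption, and `grid_position` is a hypothetical name.

```python
COLUMNS = ("left", "centre", "right")
ROWS = ("top", "middle", "bottom")


def grid_position(box, frame_width, frame_height):
    """Name the cell of a 3x3 grid that contains the centre of a bounding box."""
    x, y, w, h = box
    centre_x = x + w / 2
    centre_y = y + h / 2
    # Scale the centre to the range 0..3 and clamp so the far edge stays in the last cell.
    col = min(int(3 * centre_x / frame_width), 2)
    row = min(int(3 * centre_y / frame_height), 2)
    return f"{ROWS[row]} {COLUMNS[col]}"


print(grid_position((300, 220, 40, 40), 640, 480))  # middle centre
```

The returned string could be combined with the object label, for example "car, middle centre", before being passed to the text-to-speech engine.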

This project was featured on Robi.

Images